Overview of our work that will be presented at the CHI ’18 conference in Montréal, Canada.

We will present nine papers, four late breaking work publications, three workshop publications, and one contribution to the doctoral consortium. See you in Montréal!

Papers

Designing Consistent Gestures Across Device Types: Eliciting RSVP Controls for Phone, Watch, and Glasses

Monday, April 23, 2018 – 11:30, Room: 518AB (Paper Session: Cross device interaction)

In the era of ubiquitous computing, people expect applications to work across different devices. To provide a seamless user experience it is therefore crucial that interfaces and interactions are consistent across different device types. In this paper, we present a method to create gesture sets that are consistent and easily transferable. Our proposed method entails 1) the gesture elicitation on each device type, 2) the consolidation of a unified gesture set, and 3) a final validation by calculating a transferability score. We tested our approach by eliciting a set of user-defined gestures for reading with Rapid Serial Visual Presentation (RSVP) of text for three device types: phone, watch, and glasses. We present the resulting, unified gesture set for RSVP reading and show the feasibility of our method to elicit gesture sets that are consistent across device types with different form factors.

Pac-Many: Movement Behavior when Playing Collaborative and Competitive Games on Large Displays

Monday, April 23, 2018 – 16:30, Room: 514B (Paper Session: Large Displays & Interactive Floors)

Previous work has shown that large high-resolution displays (LHRDs) can enhance collaboration between users. As LHRDs allow free movement in front of the screen, an understanding of movement behavior is required to build successful interfaces for these devices. This paper presents Pac-Many, a multiplayer version of the classic computer game Pac-Man, to study group dynamics when using LHRDs. We utilized smartphones as game controllers to enable free movement while playing the game. In a lab study, using a 4m × 1m LHRD, 24 participants (12 pairs) played Pac-Many in collaborative and competitive conditions. The results show that players in the collaborative condition divided screen space evenly. In contrast, competing players stood closer together to avoid benefits for the other player. We discuss how the nature of the task is important when designing and analyzing collaborative interfaces for LHRDs. Our work shows how to account for the spatial aspects of interaction with LHRDs to build immersive experiences.

Reading on Smart Glasses: The Effect of Text Position, Presentation Type and Walking

Tuesday, April 24, 2018 – 9:00, Room: 519AB (Paper Session: In your face)

Smart glasses are increasingly being used in professional contexts. With key applications such as short messaging and news reading, they enable continuous access to textual information. In particular, smart glasses allow reading while performing other activities as they do not occlude the user’s world view. For efficient reading, it is necessary to understand how a text should be presented on them. We, therefore, conducted a study with 24 participants using a Microsoft HoloLens to investigate how to display text on smart glasses while walking and sitting. We compared text presentation in the top-right, center, and bottom-center positions with Rapid Serial Visual Presentation (RSVP) and line-by-line scrolling. We found that text displayed in the top-right of smart glasses increases subjective workload and reduces comprehension. RSVP yields higher comprehension while sitting. Conversely, reading with scrolling yields higher comprehension while walking. Insights from our study inform the design of reading interfaces for smart glasses.

PalmTouch: Using the Palm as an Additional Input Modality on Commodity Smartphones

Tuesday, April 24, 2018 – 11:00, Room: 513AB (Paper Session: Human Senses)

Touchscreens are the most successful input method for smartphones. Despite their flexibility, touch input is limited to the location of taps and gestures. We present PalmTouch, an additional input modality that differentiates between touches of fingers and the palm. Touching the display with the palm can be a natural gesture since moving the thumb towards the device’s top edge implicitly places the palm on the touchscreen. We present different use cases for PalmTouch, including the use as a shortcut and for improving reachability. To evaluate these use cases, we have developed a model that differentiates between finger and palm touch with an accuracy of 99.53% in realistic scenarios. Results of the evaluation show that participants perceive the input modality as intuitive and natural to perform. Moreover, they appreciate PalmTouch as an easy and fast solution to address the reachability issue during one-handed smartphone interaction compared to thumb stretching or grip changes.

Physical Keyboards in Virtual Reality: Analysis of Typing Performance and Effects of Avatar Hands

Tuesday, April 24, 2018 – 14:00, Room: 514C (Paper Session: Virtual Reality 1)

Entering text is one of the most common tasks when interacting with computing systems. Virtual Reality (VR) presents a challenge as neither the user’s hands nor the physical input devices are directly visible. Hence, conventional desktop peripherals are very slow, imprecise, and cumbersome. We developed an apparatus that tracks the user’s hands and a physical keyboard and visualizes them in VR. In a text input study with 32 participants, we investigated the achievable text entry speed and the effect of hand representations and transparency on typing performance, workload, and presence. With our apparatus, experienced typists benefited from seeing their hands and reached almost outside-VR performance. Inexperienced typists profited from semi-transparent hands, which enabled them to type just 5.6 WPM slower than with a regular desktop setup. We conclude that optimizing the visualization of hands in VR is important, especially for inexperienced typists, to enable a high typing performance.

Evaluating the Disruptiveness of Mobile Interactions: A Mixed-Method Approach

Tuesday, April 24, 2018 – 16:00, Room: 515ABC (Paper Session: Smartphone use)

While the proliferation of mobile devices has rendered mobile notifications ubiquitous, researchers are only slowly beginning to understand how these technologies affect everyday social interactions. In particular, the negative social influence of mobile interruptions remains unexplored from a methodological perspective. This paper contributes a mixed-method evaluation procedure for assessing the disruptive impact of mobile interruptions in conversation. The approach combines quantitative eye tracking, qualitative analysis, and a simulated conversation environment to enable fast assessment of disruptiveness. It is intended to be used as a part of an iterative interaction design process. We describe our approach in detail, present an example of its use to study a new call declining technique, and reflect upon the pros and cons of our approach.

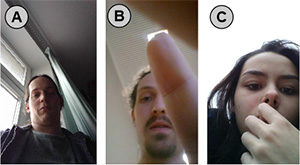

Understanding Face and Eye Visibility in Front-Facing Cameras of Smartphones used in the Wild

Tuesday, April 24, 2018 – 16:00, Room: 515ABC (Paper Session: Smartphone use)

Commodity mobile devices are now equipped with high-resolution front-facing cameras, allowing applications in biometrics (e.g., FaceID in the iPhone X), facial expression analysis, or gaze interaction. However, it is unknown how often users hold devices in a way that allows capturing their face or eyes, and how this impacts detection accuracy. We collected 25,726 in-the-wild photos, taken from the front-facing camera of smartphones as well as associated application usage logs. We found that the full face is visible about 29% of the time, and that in most cases the face is only partially visible. Furthermore, we identified an influence of users’ current activity; for example, when watching videos, the eyes but not the entire face are visible 75% of the time in our dataset. We found that a state-of-the-art face detection algorithm performs poorly against photos taken from front-facing cameras. We discuss how these findings impact mobile applications that leverage face and eye detection, and derive practical implications to address the limitations of the state of the art.

The Effect of Offset Correction and Cursor on Mid-Air Pointing in Real and Virtual Environments

Thursday, April 26, 2018 – 11:00, Room: 513AB (Paper Session: Virtual Reality 2)

Pointing at remote objects to direct others’ attention is a fundamental human ability. Previous work explored methods for remote pointing to select targets. Absolute pointing techniques that cast a ray from the user to a target are affected by humans’ limited pointing accuracy. Recent work suggests that accuracy can be improved by compensating systematic offsets between targets a user aims at and rays cast from the user to the target. In this paper, we investigate mid-air pointing in the real world and virtual reality. Through a pointing study, we model the offsets to improve pointing accuracy and show that being in a virtual environment affects how users point at targets. In the second study, we validate the developed model and analyze the effect of compensating systematic offsets. We show that the provided model can significantly improve pointing accuracy when no cursor is provided. We further show that a cursor improves pointing accuracy but also increases the selection time.

Fingers’ Range and Comfortable Area for One-Handed Smartphone Interaction Beyond the Touchscreen

Thursday, April 26, 2018 – 14:00, Room: 514B (Paper Session: Typing & Touch 2)

Previous research and recent smartphone development presented a wide range of input controls beyond the touchscreen. Fingerprint scanners, silent switches, and Back-of-Device (BoD) touch panels offer additional ways to perform input. However, with the increasing number of input controls on the device, unintentional input or limited reachability can hinder interaction. In a one-handed scenario, we conducted a study to investigate the areas that can be reached without losing grip stability (comfortable area), and with stretched fingers (maximum range) using four different phone sizes. We describe the characteristics of the comfortable area and maximum range for different phone sizes and derive four design implications for the placement of input controls to support one-handed BoD and edge interaction. Among other findings, we show that the index and middle finger are the most suited fingers for BoD interaction and that the grip shifts towards the top edge with increasing phone sizes.

Late Breaking Work

PD Notify: Investigating Personal Content on Public Displays

Tuesday, April 24, 2018 – 15:20, Room: 220BC Exhibit Hall

Public displays are becoming more and more ubiquitous. Current public displays are mainly used as general information displays or to display advertisements. How personal content should be shown is still an important research topic. In this paper, we present PD Notify, a system that mirrors a user’s pending smartphone notifications on nearby public displays. Notifications are an essential part of current smartphones and inform users about various events, such as new messages, pending updates, personalized news, and upcoming appointments. PD Notify implements privacy settings to control what is shown on the public displays. We conducted an in-situ study in a semi-public work environment for three weeks with seven participants. The results of this first deployment show that displaying personal content on public displays is not only feasible but also valued by users. Participants quickly settled for privacy settings that work for all kinds of content. While they liked the system, they did not want to spend time configuring it.

Understanding Large Display Environments: Contextual Inquiry in a Control Room

Tuesday, April 24, 2018 – 15:20, Room: 220BC Exhibit Hall

Research has identified benefits of large high-resolution displays (LHRDs) for exploring and understanding visual information. However, these displays are still not commonplace in work environments. Control rooms are one of the rare cases where LHRD workplaces are used in practice. To understand the challenges in developing LHRD workplaces, we conducted a contextual inquiry in a public transport control room. In this work, we present the physical arrangement of the control room workplaces and describe work routines with a focus on the interaction with visually displayed content. While staff members stated that they would prefer to use even more display space, we identified critical challenges for input on LHRDs and designing graphical user interfaces (GUIs) for LHRDs.

Posture Sleeve: Using Smart Textiles for Public Display Interactions

Tuesday, April 24, 2018 – 15:20, Room: 220BC Exhibit Hall

Today, public displays are used to display general purpose information or advertisements in many public and urban spaces. In addition to that, research identified novel application scenarios for public displays. These scenarios, however, mainly include gesture- and posture-based interaction relying on optical tracking. Deploying optical tracking systems in the real world is not always possible since real-world deployments have to tackle several challenges. These challenges include changing light conditions or privacy concerns. In this paper, we explore how smart fabric can detect the user’s posture. We particularly focus on the user’s arm posture and how this can be used for interacting with public displays. We conduct a preliminary study to record different arm postures and create a model to detect them. Finally, we conduct an evaluation study using a simple game that uses the arm posture as input. We show that smart textiles are suitable to detect arm postures and feasible for this type of application scenario.

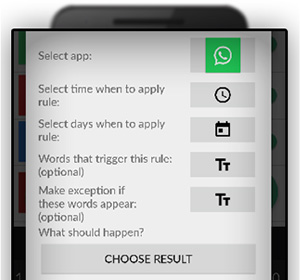

Understanding User Preferences towards Rule Based Notification Deferral

Wednesday, April 25, 2018 – 15:20, Room: 220BC Exhibit Hall

Mobile devices generate a tremendous number of notifications every day. While some of them are important, a huge number of them are not of particular interest for the user. In this work, we investigate how users manually defer notifications using a rule-based approach. We provide three different types of rules, namely, suppressing, summarizing once a day, and snoozing to a specific point in time. In a user study with 16 participants, we explore how users apply these rules. We report on the usage behavior as well as feedback received during an interview. Last, we derive guidelines that inform future notification deferral systems.
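To make the three rule types concrete, the following is a minimal sketch of how such rules could be evaluated. It is an illustration only and not the system used in the study: the names `Action`, `Rule`, `Notification`, and `decide`, as well as matching rules by app name, are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum
from typing import Optional, Sequence


class Action(Enum):
    SUPPRESS = "suppress"    # never show the notification
    SUMMARIZE = "summarize"  # hold it for a once-a-day summary
    SNOOZE = "snooze"        # hold it until a specific point in time
    DELIVER = "deliver"      # no rule applies: show it immediately


@dataclass(frozen=True)
class Rule:
    app: str                                 # hypothetical matching criterion
    action: Action
    snooze_until: Optional[datetime] = None  # only meaningful for SNOOZE


@dataclass(frozen=True)
class Notification:
    app: str
    text: str


def decide(notification: Notification, rules: Sequence[Rule], now: datetime) -> Action:
    """Return the action of the first rule that applies to the notification."""
    for rule in rules:
        if rule.app != notification.app:
            continue
        if rule.action is Action.SNOOZE:
            # A snooze rule only defers while its target time lies in the future.
            if rule.snooze_until is not None and now < rule.snooze_until:
                return Action.SNOOZE
            continue
        return rule.action
    return Action.DELIVER
```

In this sketch, a notification that matches no rule, or only an expired snooze rule, is delivered immediately; a real system would also need to store deferred notifications and release them at the summary or snooze time.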

Workshop Submissions

Using Smart Objects to Convey Relevant Information in a Smart Home Notification System

Current home reminder applications mainly support users with disabilities. Related work found that people of all age groups tend to forget upcoming appointments and tasks. Therefore, there is a need for home reminder systems that support users from all age groups in their daily lives. Today, many traditional household appliances and everyday artifacts in the users’ homes are replaced with their smart counterparts. In this paper, we envision a smart home notification system that supports its users in their daily routines and schedules. In addition to current smart home notification appliances, our envisioned smart home notification system also takes smart objects such as smart mirrors into account to convey smart home notifications to the users. Further, we discuss challenges for the development of future smart home notification systems. These challenges include identifying accepted smart home notification sources, designing smart home notification systems, and addressing privacy and security concerns.

Alexandra Voit

On the Need for Standardized Methods to Study the Social Acceptability of Emerging Technologies

Social acceptability of technologies is an important factor to predict their success and to optimize their design. A substantial body of work investigated the social acceptability of a broad range of technologies. Previous work applied a wide range of methods and questionnaires but did not converge on a set of established methods. Standardized or default approaches are crucial as they enable researchers to rely on well-tested methods, which ease designing studies and can ultimately improve our work. In particular, there are no validated or even widely used questionnaires to investigate the social acceptability of technologies. In this position paper, we argue for the need for a validated questionnaire to assess the social acceptability of technologies. To open the room for discussions, we present an initial procedure to build a validated questionnaire, including the design of a study and a proposal for stimuli needed for such a study.

Valentin Schwind, Jens Reinhardt, Rufat Rzayev, Niels Henze, Katrin Wolf

GhostVR: Enhancing Co-Presence in Social Virtual Environments

Most people would never go alone into a museum. They go with a friend, with their partner or with a person sharing similar interests. In the real world, most spare time activities, including visiting exhibitions, watching games, going shopping or doing sports, are social experiences. Virtual reality (VR) for many spare time applications can therefore only succeed if it also enables social experiences. Hence, we are interested in how virtual multi-user experiences should be designed. In particular, we aim to support users in intuitively understanding the social context. In this position paper, we discuss research questions we aim to address, including missing information cues, disorientation, and proxemic challenges. We present a prototype that visualizes co-users and objects outside the user’s field of view. The prototype highlights virtual objects co-users look at to foster conversation and shared experiences. We aim to spark discussions on designing VR that enables rich social experiences.

Jens Reinhardt, Rufat Rzayev, Niels Henze, Katrin Wolf

Doctoral Consortium

Fully Hand-and-Finger-Aware Smartphone Interaction

Touchscreens enable intuitive interaction through a combination of input and output. Despite the advantages, touch input on smartphones still poses major challenges that impact the usability. Amongst others, this includes the fat-finger problem, reachability challenges and the lack of shortcuts. To address these challenges, I explore interaction methods for smartphones that extend the input space beyond single taps and swipes on the touchscreen. This includes interacting with different fingers and parts of the hand driven by machine learning and raw capacitive data of the full interacting hand. My contribution is further broadened by the development of smartphone prototypes with full on-device touch sensing capability and an understanding of the physiological limitations of the human hand to inform the design of fully hand-and-finger-aware interaction.